Guide to Decoding Research Studies

Spot the studies that stand out and those that need more proof.

Welcome to Fed—I’m thrilled you’re here! This is your go-to resource for nutrition questions and controversies. Think of me as your tutor—here to break down the noise, offer context, and help make sense of it all.

Nutrition research is complex, so it’s essential to assess and categorize studies to know how much weight each one deserves. For example, suppose perimenopausal women who ate cabbage daily for two months reported fewer hot flashes. Does that mean cabbage reduces menopausal symptoms? No. It simply indicates that short-term cabbage consumption was associated with fewer reported hot flashes in some women. Small, short-term studies have value, but on their own they don’t establish definitive cause and effect.

The same goes for mechanistic explanations: just because a process is observed in a lab or at the cellular level doesn’t mean it translates to real-life outcomes. In real life, many factors act at once, and they can significantly change how that process plays out.

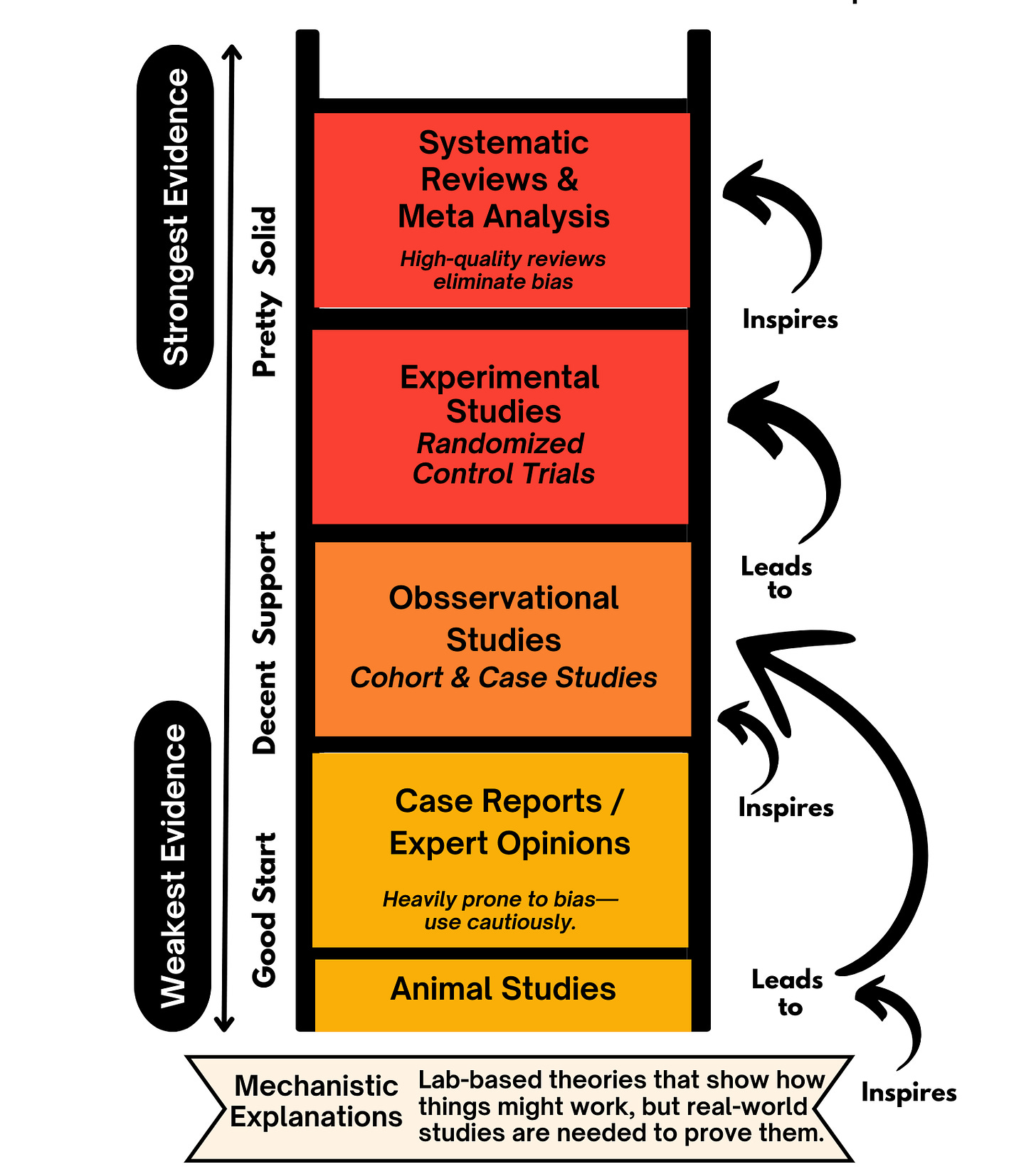

Climbing the evidence ladder

The most reliable research comes from studies at the top of the evidence hierarchy. The researcher matters too. What many people outside the small circle of nutrition researchers don’t realize is that experts often specialize in particular subjects. For example, if you have questions about processed foods, look for studies by Dr. Kevin Hall; for food policy, consider the work of Dr. Marion Nestle.

Nutrition science isn’t a free-for-all—it’s a ladder, where each step builds on the one below it.

At the bottom, we have animal studies—useful for spotting biological clues, but remember, mice aren’t humans.

Then come case reports, essentially medical anecdotes—interesting, but not proof.

Next, observational studies scan large populations to identify patterns (e.g., people who eat nuts tend to live longer). They can’t prove cause and effect, but they lay the groundwork for deeper investigation.

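That’s where randomized controlled trials (RCTs) come in. RCTs randomly assign participants to an intervention group or a control group, so other factors tend to even out between the groups. Comparing the groups’ outcomes makes RCTs the gold standard for testing whether something actually causes an effect.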

At the top? Systematic reviews and meta-analyses, which gather and combine the results of multiple studies, filtering out the noise of any single study to give us the clearest, most reliable conclusions.

But here’s the twist: mechanistic explanations often try to leapfrog the ladder. They sound logical (like the claim that grapefruit’s acidity burns fat), but without real-world studies to confirm them, they’re just hypotheses, not proof.

What’s not on this list?

Your friend’s brother’s hot take, influencer opinions (no matter how passionately they swear it works), unqualified podcasters, anyone claiming they know it all with absolute certainty—and people who say they “googled it.”

So the next time someone confidently says, “Research shows…,” ask them to show you the study, and then ask yourself: where does that research land on the ladder? Because not all evidence stands on solid ground.